TLDR: Use our API to run 1 million tokens of Llama 2 70B for just $0.50. Decart built a proprietary LLM inference engine from scratch in C++ and NVIDIA CUDA to outperform existing engines, and Cerebrium built a cutting-edge serverless compute platform. By writing custom kernels that exploit the hardware capabilities of the NVIDIA H100 GPU, we keep latency low even at the unprecedented $0.50 price point. The approach has already been proven on CoreWeave GPU infrastructure.

Large language models (LLMs) are becoming increasingly valuable for building solutions to both business and consumer use cases. However, factors such as cost and latency have made wide-scale deployment challenging.

OpenAI’s GPT-4 Turbo costs between $10 and $30 per million tokens, while GPT-3.5 Turbo costs between $1 and $2 per million tokens. Anthropic offers Claude 2.1 at $11 to $32.70 per million tokens and Claude Instant at $1.60 to $5.50. Other providers offer Llama 2 70B at $1 to $2 per million tokens. So how can we offer Llama 2 70B through our API at $0.50 per million tokens and still keep latency very low?
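To make the price gap concrete, the small sketch below computes the monthly bill for a workload at each of the prices quoted above. It assumes every token is billed at one flat rate and treats each quoted range's endpoints as lower and upper bounds; the 500-million-token workload and the function and dictionary names are hypothetical, chosen only for illustration.

```python
# Per-million-token prices (USD) quoted in the text, as (low, high) bounds.
PRICES_PER_MILLION = {
    "GPT-4 Turbo": (10.00, 30.00),
    "GPT-3.5 Turbo": (1.00, 2.00),
    "Claude 2.1": (11.00, 32.70),
    "Claude Instant": (1.60, 5.50),
    "Llama 2 70B (other providers)": (1.00, 2.00),
    "Llama 2 70B (Decart)": (0.50, 0.50),
}


def monthly_cost(tokens: int, price_per_million: float) -> float:
    """Cost in USD of processing `tokens` tokens at a flat per-million-token price."""
    return tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    tokens = 500_000_000  # hypothetical workload: 500M tokens per month
    for name, (low, high) in PRICES_PER_MILLION.items():
        print(f"{name:32s} ${monthly_cost(tokens, low):>10,.2f} - ${monthly_cost(tokens, high):>10,.2f}")
```

At this hypothetical volume, $0.50 per million tokens works out to $250 a month, versus $500 to $1,000 at the $1 to $2 range other providers charge for the same model.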

We wrote the Decart LLM inference engine from scratch to leverage hardware capabilities introduced in the NVIDIA H100 GPU, such as thread block clusters. This allows us to maintain an incredibly low price of $0.50 per million tokens of Llama 2 70B while keeping response times fast.

– Dean Leitersdorf, Decart CEO

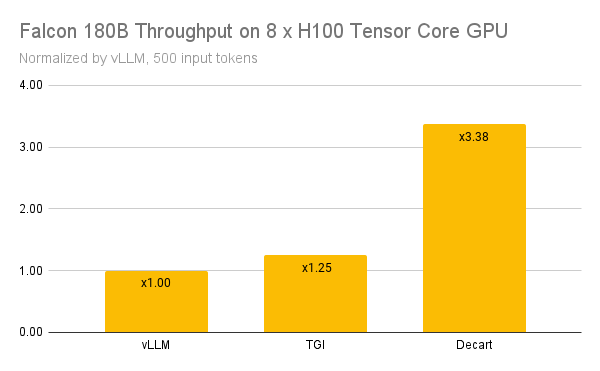

The Decart inference engine uses novel paging techniques to stay highly efficient even with hundreds or thousands of concurrent requests on a single GPU node. For instance, it replaces the software-based paged attention mechanism common in open-source libraries with a system built on the GPU's own internal memory paging capabilities. This optimization yields significant throughput speedups, even for very large models. To demonstrate this, we ran our engine on CoreWeave infrastructure with the largest current-generation open LLM, Falcon 180B by TII, and compared the results against vLLM and TGI:

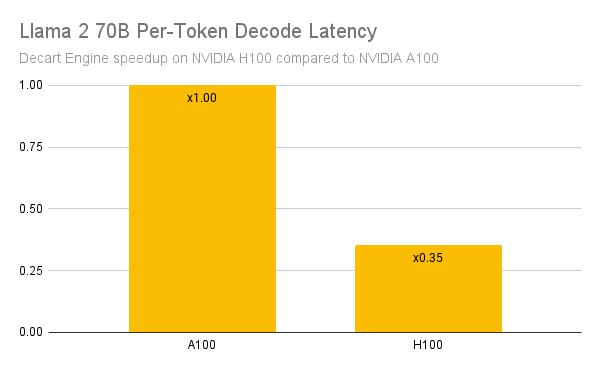
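For readers unfamiliar with the mechanism being replaced, the sketch below models the general idea behind the software-based paged attention used in open-source engines such as vLLM: the KV cache is carved into fixed-size blocks, and each request keeps a block table that translates token positions into physical blocks, much like an operating-system page table. This is an illustrative Python model of that conventional approach, not Decart's hardware-paging design, which the post does not detail; the block size and all class and method names are hypothetical.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 16  # tokens per KV-cache block (hypothetical value)


class BlockAllocator:
    """Hands out fixed-size physical KV-cache blocks from a shared free list."""

    def __init__(self, num_blocks: int) -> None:
        # Stored in reverse so that pop() returns block 0 first, then 1, 2, ...
        self.free_blocks = list(range(num_blocks - 1, -1, -1))

    def allocate(self) -> int:
        if not self.free_blocks:
            raise MemoryError("KV cache exhausted")
        return self.free_blocks.pop()

    def release(self, block_id: int) -> None:
        self.free_blocks.append(block_id)


@dataclass
class Sequence:
    """One request's view of the cache: a table mapping logical to physical blocks."""

    num_tokens: int = 0
    block_table: list[int] = field(default_factory=list)

    def append_token(self, alloc: BlockAllocator) -> None:
        # A new physical block is needed only when the last block is full, so
        # memory grows on demand instead of being reserved for the max length.
        if self.num_tokens % BLOCK_SIZE == 0:
            self.block_table.append(alloc.allocate())
        self.num_tokens += 1

    def slot(self, pos: int) -> tuple[int, int]:
        """Translate a token position into (physical block id, offset in block)."""
        return self.block_table[pos // BLOCK_SIZE], pos % BLOCK_SIZE

    def finish(self, alloc: BlockAllocator) -> None:
        # Return every block to the shared pool when the request completes.
        for block_id in self.block_table:
            alloc.release(block_id)
        self.block_table.clear()
        self.num_tokens = 0
```

Because blocks are allocated one at a time as tokens arrive, many concurrent requests can share a GPU's memory without each reserving space for its maximum length. The lookup in `slot` is the translation a software paged-attention kernel performs on every KV access; the post states that Decart's engine instead relies on the hardware's own memory paging for this.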

For Llama 2 70B, we first optimized for throughput to make hosting the API at $0.50 per million tokens viable. Next, we needed low per-token latency. To achieve it, we ported our Llama 2 70B backend from NVIDIA A100 GPUs to NVIDIA H100 GPUs, writing custom kernels that use the H100's new hardware capabilities to drastically reduce per-token latency. Ultimately, the Decart engine achieved low per-token latency guarantees while keeping costs low enough to offer the API at $0.50 per million tokens.
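The link between throughput, latency, and price can be made explicit with some back-of-the-envelope arithmetic. The sketch below assumes a GPU node with a fixed hourly cost and a decode loop in which each active sequence emits one token per step, ignoring prefill, scheduling overhead, and idle time. The node cost, latency figures, and function names are hypothetical and are not Decart's numbers.

```python
import math


def required_throughput(node_cost_per_hour: float, price_per_million: float) -> float:
    """Aggregate tokens/s a node must sell for API revenue to cover its hourly cost."""
    tokens_per_hour_needed = node_cost_per_hour / price_per_million * 1_000_000
    return tokens_per_hour_needed / 3600


def decode_throughput(concurrent_sequences: int, per_token_latency_s: float) -> float:
    """Approximate tokens/s when every active sequence emits one token per decode step."""
    return concurrent_sequences / per_token_latency_s


def min_concurrency(node_cost_per_hour: float, price_per_million: float,
                    per_token_latency_s: float) -> int:
    """Smallest number of concurrent sequences that reaches break-even throughput."""
    needed = required_throughput(node_cost_per_hour, price_per_million)
    return math.ceil(needed * per_token_latency_s)
```

For example, with a hypothetical node cost of $36 per hour, `required_throughput(36.0, 0.50)` is 20,000 tokens/s, and at 50 ms per token that means keeping about 1,000 sequences in flight. This is why throughput at high concurrency and per-token latency have to be optimized together: every millisecond shaved off a decode step raises the throughput each in-flight sequence contributes.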

To access the API and test its performance, visit Decart. If you would like to get in touch about model requests, fine-tuning, private deployments, or deployments on Cerebrium, please reach out.