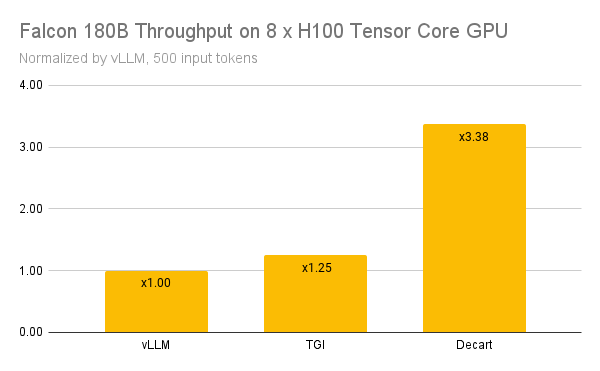

Last week, we announced our API, currently the cheapest LLM inference API on the market by a margin of almost 2x: we sell Llama 2 70B at only $0.50 per million tokens. We provide simple-to-use interfaces that support output streaming in Python through libraries such as requests, openai, and langchain, in only a few lines of code.
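As an illustration of what "a few lines of code" can look like, here is a minimal streaming sketch with the `openai` Python client (version 1.0 or later). It assumes the API exposes an OpenAI-compatible chat completions endpoint. The environment variable names (`LLM_API_BASE_URL`, `LLM_API_KEY`, `LLM_MODEL`), the fallback model name, and the helper names `iter_text` and `stream_chat` are placeholders for illustration, not documented values.

```python
import os


def iter_text(stream):
    """Yield text fragments from an OpenAI-style chat completion stream."""
    for chunk in stream:
        if not chunk.choices:  # some chunks carry no choices
            continue
        piece = chunk.choices[0].delta.content
        if piece:  # skip role-only or empty deltas
            yield piece


def stream_chat(prompt):
    """Print a streamed reply as it arrives (sketch; needs network access)."""
    from openai import OpenAI  # imported lazily; requires openai>=1.0

    client = OpenAI(
        base_url=os.environ["LLM_API_BASE_URL"],  # hypothetical env var
        api_key=os.environ["LLM_API_KEY"],        # hypothetical env var
    )
    stream = client.chat.completions.create(
        model=os.environ.get("LLM_MODEL", "llama-2-70b"),  # placeholder name
        messages=[{"role": "user", "content": prompt}],
        stream=True,  # ask the server to send tokens as they are generated
    )
    for piece in iter_text(stream):
        print(piece, end="", flush=True)
    print()
```

After setting the environment variables, calling `stream_chat("Tell me a joke")` would print tokens as they arrive. The parsing is kept in `iter_text` so it can be exercised offline with fake chunks.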

Our framework is built on an LLM inference engine that we designed from scratch in C++ and CUDA, and that achieves drastically higher throughput than competing engines. The following are teasers for the insights that power our engine. Follow us on X for more soon!

- The Focus is Back to Compute: For most of 2023, LLM inference was memory-bound (in terms of both memory size and memory bandwidth), leading to solutions such as vLLM. As of today, all the popular large models (e.g., Llama 2 70B, Falcon 180B) use Grouped Query Attention (GQA), which alleviates memory bandwidth constraints because the same KV cache entries are loaded once and shared across several query heads. Combine this with sharding over 8 GPUs that have 80GB of memory each (even when running only Llama 2 70B), and DRAM capacity is no longer a bottleneck either, since the KV caches are small enough even with huge batch sizes. To top it all off, writing custom code to utilize the incredible speed of SXM5 makes chip-to-chip communication bottlenecks a thing of the past as well. Therefore, while LLM inference in Q2-Q3 2023 (e.g., Llama 1) was memory-bound, the focus in Q4 2023 is back on compute throughput.

- Input Tokens = Output Tokens: While many competing LLM APIs charge differently for input and output tokens (e.g., GPT-3.5’s rates are currently $1 per 1M input tokens and $2 per 1M output tokens), nowadays, if you correctly optimize the memory accesses in your CUDA kernels, you can reach identical performance for input and output tokens. This is possible because LLM inference is now compute-bound and the compute requirements per token are similar for input and output tokens. As such, the cost of processing an input token can be made roughly the same as the cost of processing an output token. Notice that our title says 25,000 tokens per second. It doesn’t matter whether these are input tokens, output tokens, or a mix of both, as our engine runs at roughly the same speed in either case.

- Speculative Decoding Won’t Save Us: While techniques such as speculative decoding (and other recent multi-token decoding techniques) can reach blazing-fast decode latency when working with small batch sizes, they can only harm the overall throughput and cost efficiency (and even the decode latency) when the server is at full utilization. When it came out, the main idea behind speculative decoding was that output tokens were more expensive to process than input tokens, so several output tokens would be processed at once, as if they were input tokens. However, now that it is possible to write custom kernels that process output tokens as fast as input tokens, speculative decoding no longer helps throughput. Further, it can actually hurt throughput, as it can only increase the number of tokens that the large model needs to process (e.g., when a speculated token is incorrect and the computation needs to be repeated). Thus, when LLMs are served at scale with an engine geared towards high throughput and running at peak usage, speculative decoding becomes harmful.
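A rough back-of-envelope sketch can make two of the points above concrete: how much GQA shrinks the KV cache, and how speculative decoding inflates the work done by the large model. The numbers here are assumptions, not measurements from our engine: the publicly listed Llama 2 70B shape (80 layers, 64 query heads, 8 KV heads, head dimension 128), fp16 weights and cache, and the standard simplified speculative decoding model in which each drafted token is accepted independently with probability `alpha` and `k` tokens are drafted per step. Draft-model cost, activations, and runtime overhead are ignored.

```python
GB = 10**9


def kv_cache_bytes_per_token(n_layers, n_kv_heads, head_dim, bytes_per_value=2):
    """Bytes of KV cache stored per token (keys and values, all layers)."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value


def max_cached_tokens(n_gpus=8, gpu_mem_gb=80, n_params=70 * 10**9,
                      bytes_per_param=2, bytes_per_token=327_680):
    """Tokens of KV cache that fit after the weights (assumes fp16, no overhead)."""
    free_bytes = n_gpus * gpu_mem_gb * GB - n_params * bytes_per_param
    return free_bytes // bytes_per_token


def expected_tokens_per_step(alpha, k):
    """Expected output tokens per verification step with k drafted tokens."""
    if alpha >= 1.0:
        return float(k + 1)  # every draft accepted, plus one token from the large model
    return (1.0 - alpha ** (k + 1)) / (1.0 - alpha)


def large_model_tokens_per_output_token(alpha, k):
    """Positions the large model processes per token it actually emits."""
    return (k + 1) / expected_tokens_per_step(alpha, k)


if __name__ == "__main__":
    gqa = kv_cache_bytes_per_token(n_layers=80, n_kv_heads=8, head_dim=128)
    mha = kv_cache_bytes_per_token(n_layers=80, n_kv_heads=64, head_dim=128)
    print(f"KV cache per token with GQA: {gqa / 1024:.0f} KiB")
    print(f"KV cache per token without GQA: {mha / 1024:.0f} KiB")
    print(f"Tokens that fit on 8x80GB next to the weights: {max_cached_tokens():,}")
    for alpha in (0.6, 0.8, 0.95):
        ratio = large_model_tokens_per_output_token(alpha, k=4)
        print(f"alpha={alpha}: {ratio:.2f}x large-model tokens per output token")
```

Under these assumptions, GQA cuts the per-token KV cache from 2.5 MiB to 320 KiB, and roughly 1.5 million cached tokens fit alongside the weights on 8 GPUs. The speculative decoding ratio is never below 1: with `alpha = 0.8` and `k = 4`, the large model processes about 1.5 positions for every token it emits, which is extra work that a compute-bound server at full utilization cannot absorb.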

Those are the teasers for today. Follow us on X for more in-depth reports soon. In the meantime, use the coupon code DECART10 to get $10 in credits on our API!